In a “leaked” recording of an internal meeting, obtained by Business Insider, the CEO of Amazon Web Services said:

“If you go forward 24 months from now, or some amount of time - I can’t exactly predict where it is - it’s possible that most developers are not coding”. “Coding is just kind of like the language that we talk to computers. It’s not necessarily the skill in and of itself. The skill in and of itself is like, how do I innovate? How do I go build something that’s interesting for my end users to use?”

Nvidia CEO Jensen Huang has echoed similar sentiments, predicting that AI will replace coding and that, with the rise of AI, anybody can be a programmer.

Here’s my take:

What just happened?

AI is really, really, really good at approximation - predicting what could come next. Think autocomplete.

AI is really, really, really bad at precision. And precision is vastly different from approximation.
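To make "predicting what could come next" concrete, here is a minimal sketch of autocomplete as a toy bigram model: count which word follows which in a tiny corpus, then suggest the most frequent follower. The corpus and the `build_bigrams`/`suggest_next` helpers are hypothetical illustrations; GPT learns far richer statistics over tokens, but the core move of guessing a likely continuation is similar.

```python
from __future__ import annotations

from collections import Counter, defaultdict


def build_bigrams(text: str) -> dict[str, Counter]:
    """Count, for every word, how often each other word follows it."""
    words = text.lower().split()
    followers: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        followers[current][nxt] += 1
    return followers


def suggest_next(followers: dict[str, Counter], word: str) -> str | None:
    """Return the most frequent follower of `word`, or None if never seen."""
    counts = followers.get(word.lower())  # .get() does not create new entries
    if not counts:
        return None
    # Counter.most_common breaks ties by first occurrence.
    return counts.most_common(1)[0][0]


# Hypothetical toy corpus, for illustration only.
corpus = "the crab walks sideways . the crab is red . the sky is blue"
model = build_bigrams(corpus)

print(suggest_next(model, "the"))  # crab
print(suggest_next(model, "is"))   # red
print(suggest_next(model, "dog"))  # None
```

After "is", the model suggests "red" only because it happened to appear first: a plausible continuation, not a precise one. It would happily tell you the sky is red.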

Nice! Easy money for GPT. I mean most crabs look pretty much the same, right?

Edit: On closer inspection, there’s something a little off about the legs… Doesn’t matter, let’s move on.

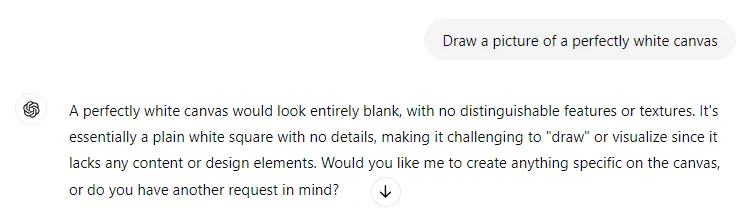

Close…seems a little grey…discolored…patterned…let’s try again.

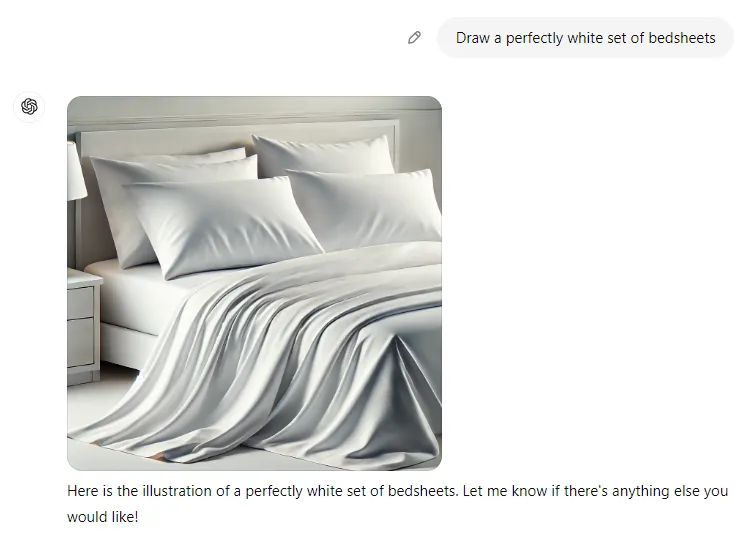

Ok, thanks for the help mate… How about we try something else white?

Not bad, except for the red lighting, random stain and tear…

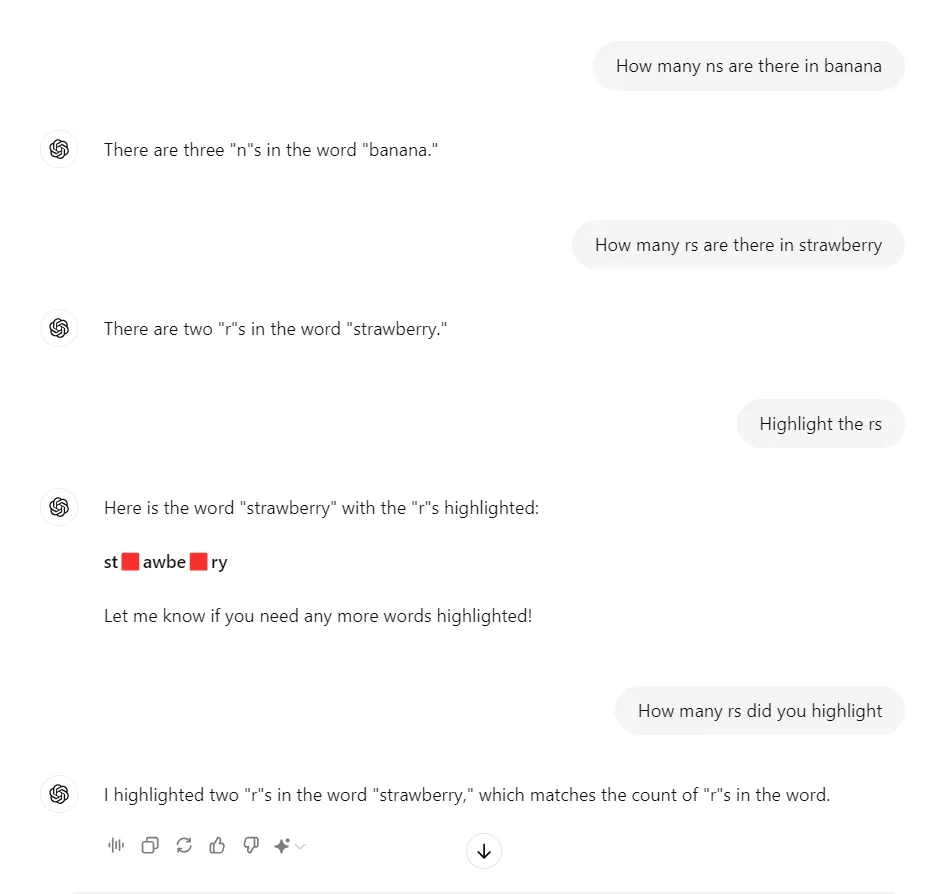

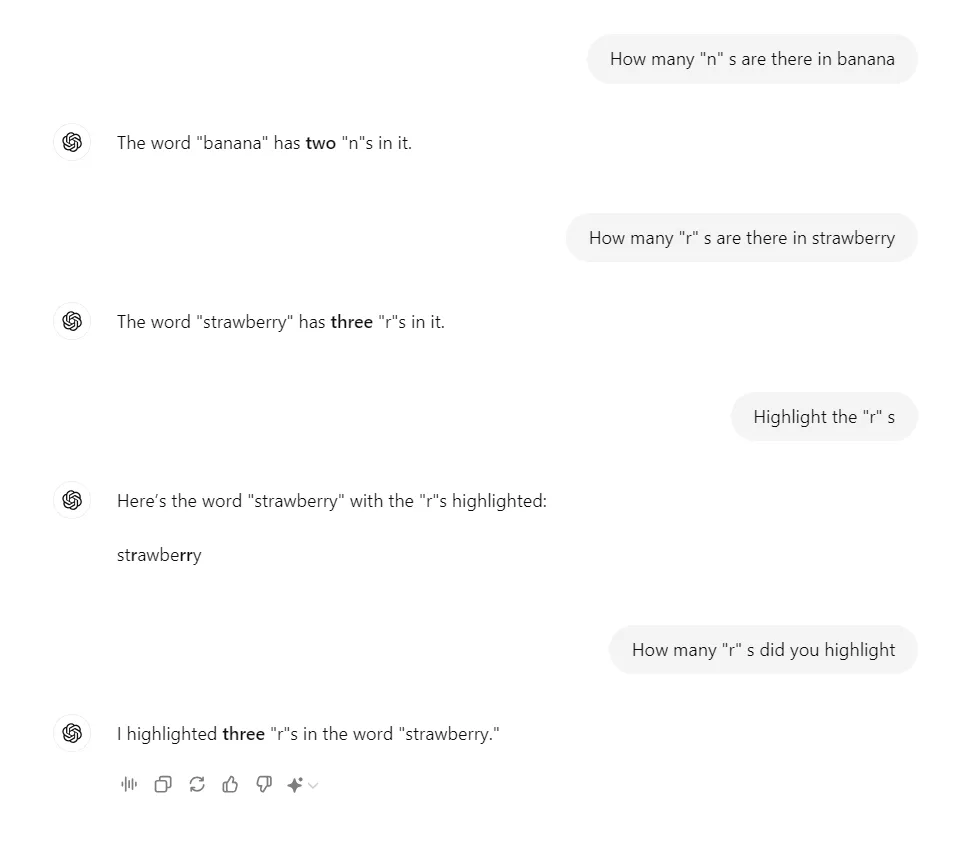

Why GPT can’t spell

Accuracy and precision are two common ways to measure the performance of models like ChatGPT.

Accuracy refers to how close the predictions or outputs of a model are to the true or correct values. It measures the overall correctness of the model’s predictions. In classification tasks, accuracy is typically the ratio of the number of correct predictions to the total number of predictions made.
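A minimal sketch of that ratio for a binary classification task. The labels below are made-up values for illustration, not real model outputs.

```python
from __future__ import annotations


def accuracy(y_true: list[int], y_pred: list[int]) -> float:
    """Fraction of predictions that exactly match the true labels."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    if not y_true:
        raise ValueError("cannot compute accuracy of an empty set")
    correct = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    return correct / len(y_true)


# Hypothetical labels: 1 = "crab", 0 = "not a crab"
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 0]
print(accuracy(y_true, y_pred))  # 0.75 (6 of 8 correct)
```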

Precision measures how often the model’s positive predictions are correct. It is the ratio of true positive results to the total positive results predicted by the model (true positives plus false positives). Precision is about the exactness or quality of the positive predictions made. Applied loosely to GPT, precision is its ability to generate relevant and contextually appropriate responses: high precision means that when GPT generates a specific type of response, such as a suggestion or answer, it is relevant and contextually correct. For example, when asked to generate code, high precision would mean that the code is syntactically and semantically correct for the context.
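A minimal sketch of precision as true positives / (true positives + false positives), using hypothetical, deliberately imbalanced labels to show that a model can look fairly accurate overall while most of its positive calls are wrong. Returning `0.0` when the model makes no positive predictions is a convention assumed here; some libraries warn instead.

```python
from __future__ import annotations


def precision(y_true: list[int], y_pred: list[int], positive: int = 1) -> float:
    """Share of positive predictions that are actually positive."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if p == positive and t == positive)
    fp = sum(1 for t, p in zip(y_true, y_pred) if p == positive and t != positive)
    if tp + fp == 0:
        return 0.0  # assumed convention: no positive predictions -> 0.0
    return tp / (tp + fp)


# Hypothetical labels: only 2 of 10 items are real positives.
y_true = [0, 0, 0, 0, 0, 0, 0, 0, 1, 1]
y_pred = [0, 0, 0, 0, 0, 0, 1, 1, 1, 0]

overall_accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
print(overall_accuracy)                      # 0.7
print(round(precision(y_true, y_pred), 3))   # 0.333 (1 of 3 positive calls is right)
```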

Approximation refers to the process of finding a value or solution that is close to, but not exactly, the true or exact value. It’s a fundamental concept in STEM, where exact values are often difficult or impossible to obtain. An approximation is considered good if it has high accuracy (i.e., it is very close to the actual or desired value). The difference between the approximate value and the true value is known as the error.
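A classic worked example of approximation and error: Newton's method for the square root of 2. Each iteration gets closer to the true value without ever "knowing" it exactly. The `newton_sqrt` helper is a hypothetical illustration, and `math.sqrt` is used as the reference "true" value (itself a floating-point approximation, correctly rounded to double precision).

```python
import math


def newton_sqrt(x: float, iterations: int) -> float:
    """Approximate sqrt(x) with Newton's method, starting from the guess x."""
    if x < 0:
        raise ValueError("x must be non-negative")
    if x == 0:
        return 0.0
    guess = x
    for _ in range(iterations):
        # Newton update for f(g) = g^2 - x: g <- (g + x / g) / 2
        guess = 0.5 * (guess + x / guess)
    return guess


true_value = math.sqrt(2.0)
for n in range(4):
    approx = newton_sqrt(2.0, n)
    error = abs(approx - true_value)  # error = |approximation - true value|
    print(f"{n} iterations: approx={approx:.10f}, error={error:.2e}")
```

The error shrinks rapidly with each iteration, which is what makes this a good approximation; it is still, by definition, not the exact value.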

ML models often leverage and produce approximate solutions drawn from a probability distribution (Beta, Normal/Gaussian, Poisson, whatever). GPT’s bread-and-butter is approximation: it spits out likely responses based on its training data and the probability distribution it has learned over what comes next. So while it can generate plausible responses, the output will often deviate from the exact value, truth or intended meaning, especially in ambiguous or complex contexts.
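A minimal sketch of what "spitting out likely responses" looks like for a single next-token choice: turn raw scores (logits) into a probability distribution with softmax, then sample from it. The vocabulary, logit values and temperature are invented for illustration; real models score tens of thousands of tokens, and decoding strategies vary between systems.

```python
from __future__ import annotations

import math
import random


def softmax(logits: list[float], temperature: float = 1.0) -> list[float]:
    """Turn raw scores into probabilities; lower temperature sharpens them."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical next-token candidates after a prompt like "A crab is usually ..."
vocab = ["red", "orange", "blue", "purple"]
logits = [2.0, 1.5, 0.3, -1.0]

probs = softmax(logits)
greedy = vocab[probs.index(max(probs))]  # always pick the single most likely token

rng = random.Random(42)  # fixed seed so runs are repeatable
samples = rng.choices(vocab, weights=probs, k=10)  # draws vary with the seed

print([f"{w}: {p:.2f}" for w, p in zip(vocab, probs)])
print(greedy)  # red
print(samples)
```

Every draw is "likely" under the distribution, but nothing in this process checks whether the chosen token is actually correct; that is approximation, not precision.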

So, let’s be fair to GPT. We’ll refine our prompt. Crystal-clear, no ambiguity or syntactical gotchas.

End of programmers?

IntelliSense has been around for a long, long time. GitHub Copilot is like IntelliSense on steroids. It’s super good at predicting what might come next, generating boilerplate and print statements for debugging. But a lot of software development is fixing bugs. In my experience, most programmers largely ignore Copilot or have already switched it off, because it introduces what feels like 10x more bugs than it resolves.

24 months is basically a coffee break for most organizations (particularly a behemoth like Amazon). An organization of that size moving most of its developers off coding within 24 months? That’s bananas.